Robots and Humans:

The Future of Collaboration

Marissa Ramirez de Chanlatte, Fellow

March 2023

Introduction

The movie A.I. Artificial Intelligence came out in 2001, and for many people it was their introduction to the phrase “artificial intelligence.” Despite the evolution of the industry, the term “AI” will always be inextricably entwined with Haley Joel Osment’s Mecha humanoid robot. The media of the 90s and 00s were filled with portrayals of the robotic future to come. I, Robot, the Terminator films, Battlestar Galactica, and many more depicted a robot-filled future, and in most of these popular depictions, the robots took human form. The media reflected the real excitement of the time: those decades saw the birth of Boston Dynamics and the DARPA Grand Challenge. So, twenty to thirty years later, are we any closer to this vision of the future?

I spent the last five months doing a deep dive into the current state of the robotics industry, trying to find out where all the robots we were promised are. It turns out that the kids raised on the movies of the 90s and 00s are now trying to build the humanoids they grew up with, but the money and publicity devoted to those efforts obscure some of the more exciting things happening in the space. Advances in computational power and the deep learning revolution have enabled rich perception and understanding of the world. We can use those advances to make robots more like humans, but also to broaden our sense of artificial intelligence beyond the human form. As Rodney Brooks, roboticist and co-founder of iRobot, Rethink Robotics, and most recently Robust.AI, wrote, “It is unfair to claim that an elephant has no intelligence just because it does not play chess.”

In our deep dive, we evaluated companies creating robots that go beyond current human capabilities, doing everything from navigating the deep ocean to moving through the inside of the human body. In this whitepaper, I will lay out the current state of robotics technology, the trends we have observed, and what makes now a particularly exciting time to invest in robotic technologies.

Why Now?

Almost every industry is facing labor shortages, which means robots are wanted not primarily to replace workers but simply to meet demand. These shortages affect a wide range of industries, from logistics to service work and elder care, so there is a wide variety of tasks that need automation. Some of these tasks are still far from being reliably performed by robots, but many that seemed far off a decade or two ago are now possible thanks to new technological advances.

Tesla’s FSD labelling at work. Image Source: Tesla

We are no longer working with your parents’ computer chips. Not only has compute density increased,[1] allowing far more complex computation to run on the robot itself, but advances in cloud and edge computing[2] also let robots communicate with each other more rapidly and access the computing infrastructure needed to process the rich information they take in.

Sensors are also playing an important role. Not only are traditional sensors such as LiDAR getting cheaper,[3] but more sensing options are also available.[4] Significant advances in computer vision allow a camera to provide more information than ever before. For the robots of yesteryear, perception often meant obstacle detection; now we can not only detect obstacles but also categorize them semantically, reconstruct them internally, share their location with other robots in the fleet, predict their movement, and much more.
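To make those perception capabilities a little more concrete, here is a minimal Python sketch of what a shared, semantically labelled obstacle record might look like. Everything in it is hypothetical and not drawn from any company we spoke with: the `Obstacle` and `FleetMap` names, the field layout, and especially the constant-velocity motion model, which is only the simplest possible stand-in for the learned prediction models real systems use.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Obstacle:
    """A perceived obstacle: what it is, where it is, how it moves (hypothetical schema)."""
    obstacle_id: int
    label: str        # semantic class, e.g. "person" or "pallet"
    x: float          # position in a shared map frame, metres
    y: float
    vx: float = 0.0   # estimated velocity, metres per second
    vy: float = 0.0

    def predict(self, dt: float) -> tuple[float, float]:
        """Constant-velocity guess of where the obstacle will be dt seconds from now."""
        return (self.x + self.vx * dt, self.y + self.vy * dt)


class FleetMap:
    """Hypothetical shared store so every robot in a fleet sees the same obstacles."""

    def __init__(self) -> None:
        self._obstacles: dict[int, Obstacle] = {}

    def publish(self, obstacle: Obstacle) -> None:
        # Latest observation wins; a real system would fuse reports from several robots.
        self._obstacles[obstacle.obstacle_id] = obstacle

    def moving(self) -> list[Obstacle]:
        # Only obstacles with a non-zero velocity estimate need motion prediction.
        return [o for o in self._obstacles.values() if (o.vx, o.vy) != (0.0, 0.0)]


# Usage: one robot reports a walking person and a parked pallet to the fleet.
fleet = FleetMap()
fleet.publish(Obstacle(1, "person", x=2.0, y=0.0, vx=0.5, vy=0.0))
fleet.publish(Obstacle(2, "pallet", x=5.0, y=1.0))
walker = fleet.moving()[0]
print(walker.label, walker.predict(2.0))  # person (3.0, 0.0)
```

A production fleet would also need timestamps, uncertainty estimates, and a way to reconcile conflicting observations; the sketch deliberately leaves all of that out.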

The combination of better algorithms and the compute power to run them means that robots are much closer to sensing the world in ways similar to humans, and in some ways superior to them.

Form Factor

Robots in the popular imagination have taken many forms, but sci-fi depictions skew toward the humanoid. Science fiction has a hold on the popular perception of robots, as can be seen in the popularity of Boston Dynamics videos or Elon Musk’s imagining of Optimus. But how practical are humanoids, and how far are we from humanoid robots providing a meaningful percentage of labor?

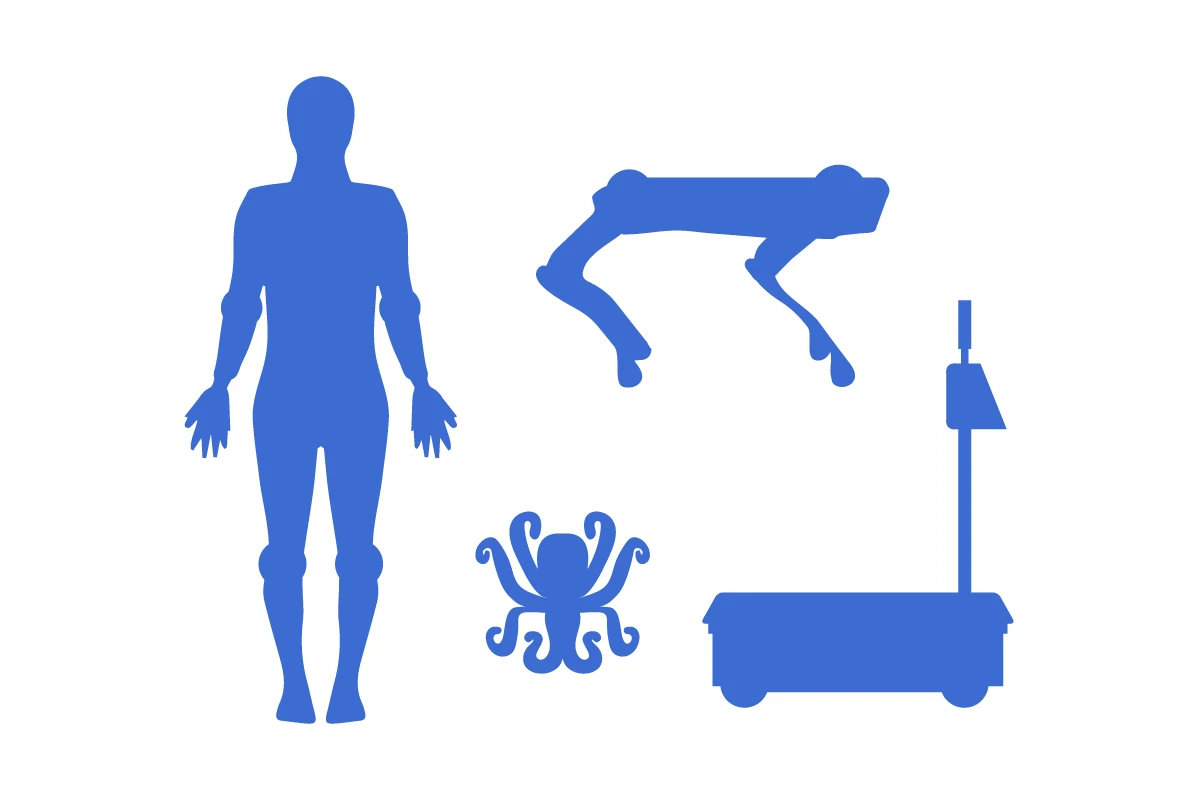

Various robot form factors (Figure AI's 01, Boston Dynamics' Spot, Wyss Institute's Octobot, Robust AI's Carter)

In my opinion, humanoids are still a sci-fi dream. Counterintuitively, it is much easier to get a humanoid to land a backflip than it is to build one that can safely and reliably do an everyday task such as walking over to a bin, rummaging through it, and picking a specified object out of it.

Humans and robots do not share the same strengths, and we have much higher expectations of robots’ skill when performing tasks that are easy for us.

A backflip is impressive because most of us can’t do one, but when it comes to something like catching a ball or opening a door, our expectations are much higher. A robot that can land a backflip on one out of ten tries is impressive, but a robot that can open a door only one out of ten times is a failure, and neither is reliable enough for any industrial application. This is one of the many reasons why I believe chasing after humanoids is not the fastest way toward widespread robot adoption. Going after tasks that are difficult for humans is a better first step.

An often-heard argument for humanoids is that the world is made for humans, so a humanoid robot will be the best option for navigating it. I find fault in this premise. Geographically, most of the world is not only not designed for humans but not even habitable by humans, yet we still have great operational needs in these harsh environments. Think of underwater infrastructure maintenance, mining battery metals, logging in forests, or drilling for oil. None of those spaces is friendly to the human form, and they are all beginning to see plenty of robotic innovation. Even the human body itself is not friendly to humans operating on it. A human surgeon has only two hands and limited vision, yet we wouldn’t imagine a humanoid robotic surgeon. We want scalpels, not hands, and we want a form factor small enough to navigate the inside of the human body.

Even if we consider only the built world, I would argue that many of our spaces are not designed for humans. Much of our outdoor space is designed for cars, and a lot of indoor space, such as warehouses and factories, is not easily navigable by humans without the aid of tools and machines. Just as we would rather build an autonomous vehicle than design a humanoid robot to drive a normal car, it wouldn’t make sense to have a humanoid drive a forklift. Warehouses are filled with human augmentation devices in the form of ladders, carts, conveyor belts, etc., so instead of pouring millions of dollars into a humanoid that can climb ladders, why not design a new form that can easily reach high shelves without needing the tool at all?

The slice of our world that is designed for humans consists of the spaces where most of us spend the majority of our time: homes, restaurants, office buildings, shopping malls, etc. Yet because those spaces are designed for us, they introduce a different problem: us. Humans are unpredictable and constantly moving around, which is exactly why public spaces are not the ideal place to first introduce humanoid robots. Bipedal humanoid robots in their current state of development are not safe to operate in spaces with large numbers of untrained, unaware humans. No one wants a 200 lb hunk of metal running into them or, worse, losing power and falling on them.

The field of soft robotics[5] is growing rapidly, addressing many of these safety concerns and creating grippers that can touch and grab without damaging objects, yet most of this innovation is not geared toward the human form. There is a plethora of novel gripper shapes[6] and many animal-inspired forms, such as fish[7] and octopi.[8]

This is not to say that there is no value in the human form, just that it is often overstated and brings challenges when it comes to commercialization. The humanoid form will continue to capture the imagination of entrepreneurs and roboticists for a long time to come, and I look forward to seeing their continued development, but the rush to bring them to market could paradoxically hinder true technological progress. The promise of a humanoid is having a general purpose robot that can do any task a human can do. In their rush to bring this immature technology to market, many companies are taking shortcuts and overfitting to a single task or environment, undermining the goal of generality.

We must not let form factor bias our view of robots’ capabilities. Just because a robot looks like a human does not mean it can act like one, and just because a form factor is familiar, doesn’t mean the technology is simple. What I am most excited about are the form factors that do what humans cannot do by themselves, such as mining at the bottom of the sea or navigating through the human body, and those that take the form of the tools that we already know and love, but supercharge them to augment our abilities in an approachable way.

Perception

Humans perceive the world around them primarily through audio sensors (ears), visual sensors (eyes), touch sensors (skin), and smell sensors (nose). Robots are catching up to human sensing abilities in those areas, but they also have access to sensors that go far beyond human capabilities. As with form factor, I believe that simply mimicking human sensing is not the right approach; we will almost always fall short. Leaning into the strengths that differentiate robots from humans, such as the ability to put visual sensors anywhere you’d like or to measure depth precisely, is what will maximize value. That being said, perception technologies have come a long way toward mimicking human perception, and these advances are a large part of what makes now such an exciting time for robotics.

Robot as a service dog

Vision

In conversation after conversation with startup founders, when we asked what was enabling them to bring their technology to market now, the answer was more often than not vision algorithms. Deep learning has caused an explosion of progress in the field of computer vision. From images and video we can very reliably detect and classify objects.[9] We can also create 3D maps[10] of our environment and use those maps for simulation and training or for navigation and localization. However, there is still significant work to be done on making those 3D maps more efficiently and reliably, and on moving beyond seeing to understanding.

Touch

Touch is an important human sense that researchers haven’t quite cracked yet for robots, although there is an exciting amount of momentum. Think of the seemingly simple task of rummaging through a box of items to pick out the one you’re looking for. We would use our eyes, but we would also rely heavily on our sense of touch to distinguish one object from another. As of today, I have not found a company that is doing this successfully with touch, but I am very excited about the research out there and think this may be the next major breakthrough in perception. Researchers at the University of Edinburgh[19] and Caltech[11] are developing electronic skins with temperature and pressure sensors, but these technologies are not yet ready for production. Another angle on touch is how a robot communicates back to a human what it is “touching” in telepresence situations. Researchers at Case Western[12] are developing haptic solutions for virtual touch that are a lot of fun to play with, but these may also take a while to trickle through to products. The immaturity of sensing through touch makes me very wary of anyone claiming their robots can complete a task by behaving like humans (imagine trying to do any of your daily tasks without a sense of touch!), but I am excited by the progress and hope to see these technologies make their way into products in the next five to ten years.

Audio

Audio sensing seems to be fairly neglected in commercial robotics, save for Alexa/Siri-style voice commands (we did see at least one Alexa-enabled autonomous mobile robot at CES), and perhaps for good reason. A lot can be accomplished with just vision and touch, and I haven’t encountered many use cases where audio is absolutely essential. However, I will use this section to highlight one of the integrations of audio that I find most interesting. Dima Damen’s group at the University of Bristol put together the Epic-Sounds dataset,[13] one of the largest datasets with audio-only labels, allowing researchers to train sound recognition models that use audio to distinguish everyday tasks, potentially augmenting robots’ sensing capabilities.

Smell

Smell is less often considered in robotics, but it is a great way to demonstrate how we already alter our environment to work more easily with human sensors. We use smell in tasks like cooking, but it is primarily our aversion to bad odors that spurs us to action. A dirty diaper calls us to change it, and we avoid foul-smelling meat. This sense is so ingrained that we add a foul odor to otherwise odorless natural gas to warn people of a leak. Robots can be equipped with alternative sensors so that we don’t have to rely on our sense of smell to detect danger. Sensors can automatically detect not only hazardous gases[14] but also radiation[15] and even disease.[16] Smell is also a great example of our long history of using non-human sensors to augment our perception. While human smell is limited, canine smell is famously strong. For centuries we have used dogs as aids in our perception, and viewing robot perception as the computer-age analogue of service dogs is a good way to frame the potential of robotic sensing.

Human Robot Interaction

Previous decades of automation have consisted of complete retrofitting and replacement. Think of factory floors that were once filled with people and now hum with machines. However, this is not the way of the future. The tasks that are easy to automate have already been automated, and surprisingly, they are a relatively small share of all tasks. More than 80% of warehouses have no automation at all.[20] There are many reasons for this, including that some tasks are difficult to automate and that most automation is fairly inflexible. More and more, people are looking to human-robot teams to solve these problems. When a robot hits an edge case or a step it can’t complete, it can pass that step to a human, making for a more flexible and robust system. I believe this is not just the answer for the near term while the technology improves, but will be the superior strategy in many cases for decades to come.
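The handoff between robot and human can be sketched as a simple confidence-threshold router. This is a hypothetical illustration rather than any vendor’s design: the `Step` and `TaskRouter` names, the 0-to-1 confidence score, and the 0.9 threshold are all assumptions chosen for readability.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    name: str
    robot_confidence: float  # hypothetical 0..1 estimate that the robot succeeds


@dataclass
class TaskRouter:
    """Hypothetical human-in-the-loop router: robots take confident steps, people get the rest."""
    threshold: float = 0.9
    robot_log: list[str] = field(default_factory=list)
    human_queue: list[str] = field(default_factory=list)

    def route(self, steps: list[Step]) -> None:
        for step in steps:
            if step.robot_confidence >= self.threshold:
                self.robot_log.append(step.name)
            else:
                # Edge case or unsupported step: hand it to a person instead of failing.
                self.human_queue.append(step.name)


# Usage: the robot drives and delivers, a person handles the awkward grasp.
router = TaskRouter(threshold=0.9)
router.route([
    Step("drive to aisle 12", 0.99),
    Step("pick tangled cable from bin", 0.40),
    Step("deliver tote to packing", 0.97),
])
print(router.robot_log)    # ['drive to aisle 12', 'deliver tote to packing']
print(router.human_queue)  # ['pick tangled cable from bin']
```

Raising the threshold sends more work to people and fewer failures to the floor; lowering it does the opposite.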

For many years chess was thought of as the pinnacle of intelligence, but since Deep Blue beat Garry Kasparov in 1997, computers have reigned supreme. However, in the first “freestyle” chess tournament in 2005, which allowed humans, computers, and human-computer teams, a hybrid human-computer team won.[17] As we’ve seen time and time again, humans are strong in areas where computers aren’t and vice versa, and the combination of the two is stronger than either by itself. We can imagine a future where AI “writes” a news article, but a human provides prompts, edits, and fact-checks. In the case of embodied AI, a robot moves around a warehouse floor, but a human does the grasping. We can already see this happening with driving. Fully autonomous driving always seems just out of reach, but our cars are getting more autonomous every day. We may still be “driving,” but cars provide more and more assistance: detecting obstacles, braking automatically, keeping lanes, and even parking! Together, the human-vehicle team raises the bar for safe driving, making it even harder for the fully autonomous vehicle to catch up.

While I predict we will see more and more of these human-robot teams in all industries, introducing robots to human spaces brings new challenges. Number one is safety. Robots that are heavy and/or move quickly can be serious safety hazards, especially if their failure mode when they lose power is to simply topple over. Choosing form factors that reduce safety risk is very important when operating in human spaces. It is also not enough for the creators of these robots to know they are safe; the humans the robots interact with must trust them as well. There are questions here of design (how do you make a robot look approachable and trustworthy?), but also serious technical challenges in communication (how does the robot let the human know what its purpose, limitations, needs, etc. are?) and understanding (how does the robot model and understand the human?). I believe the biggest barrier to widespread adoption of robots in human spaces is that no one has yet made a robot that people enjoy working with.

Many people are thinking carefully about this problem. One of my favorite thinkers on the subject is Kate Darling of the MIT Media Lab, who posits that we should think of robots like service animals. She writes, “Comparing robots to animals helps us see that robots don’t necessarily replace jobs, but instead are helping us with specific tasks, like plowing fields, delivering packages by ground or air, cleaning pipes, and guarding the homestead.”[18] Robots are programmed with autonomous mobility and decision-making capabilities, so they are more than just tools, yet not quite “co-workers.” The service animal framework offers a useful model for creating approachable robots that augment rather than replace human skills.[21]

Areas To Watch

Factories and warehouses are getting a lot of attention from robotics companies these days. They are an easy entry point for working alongside humans, because there is a lot of commercial potential, yet the environment is relatively controllable. Other areas I’m excited about are places where humans currently have difficulty operating: the deep sea, outer space, and the inside of the human body.

We also talked to quite a few agricultural robotics companies. Robotic tractors are a great place to deploy autonomous vehicle technology because there is again great commercial need, yet a relatively standard and controllable environment (no pedestrians, straight rows). Harvesting, on the other hand, is a much more challenging task, and we had difficulty finding robots that could handle a wide enough variety of harvesting tasks to be commercially tractable.

Ultimately, the combination of high commercial value and relative environmental control marks the spaces that are ripe for robots. We want to maximize value added while minimizing complexity and variation in the environment. Public spaces, with too many different people and activities, carry too much variation and safety risk to be a good testbed.

Conclusion

With the advances in compute power and perception, this is a very exciting time to be in robotics, but it is also important not to get ahead of ourselves. AI can produce some pretty convincing demos, but we must not see an impressive display of one aspect of human behavior and conclude that all other types of human behavior are easily attainable. A robot might be able to do a backflip or hold a conversation that passes the Turing test, but that doesn’t necessarily mean it can do something as seemingly “simple” as walking reliably and safely over varied terrain.

It makes the most sense to lean into what robots are best at and allow humans to excel in their own domains. The next few decades will be all about human-robot collaboration, and the winners will be the folks who figure out how to best pair humans and robots together for maximum potential.

Landscape

Warehouse & Manufacturing

Robust.AI

Locus Robotics

Covariant

Ambi Robotics

Amazon (Kiva)

Agriculture/Forestry

John Deere (Blue River, Bear Flag)

Kodama

Underwater Mining

Impossible Metals

Surgery

Endiatx

Remedy Robotics

Food Manipulation

Soft Robotics

Chef Robotics

Humanoids

Agility

Tesla

Figure

Boston Dynamics

1X (Halodi)

References

[1] Thompson, Neil C., Shuning Ge, and Gabriel F. Manso, The importance of (exponentially more) computing power, arXiv preprint, arXiv:2206.14007, 2022

[2] Solminski, Jon, and Brad Bonn, How Boston Dynamics and AWS Use Mobility and Computer Vision for Dynamic Sensing, AWS Robotics Blog, Amazon, October 2021

[3] Saracco, Roberto, LiDAR Is Getting Cheaper, IEEE Blog, July 2019

[4] Teale, Chris, Is LiDAR a ‘fool’s errand’?, Smart Cities Dive, May 2019

[5] Soft Robotics, Research, Harvard Biodesign Lab

[6] Soft Robotics

[7] Katzschmann, R., J. DelPreto, R. MacCurdy, A. Marchese, and D. Rus, SoFi - The Soft Robotic Fish, Research, MIT CSAIL, December 2018

[8] Laschi, C., Robot Octopus Points the Way to Soft Robotics With Eight Wiggly Arms, IEEE Spectrum, August 2016

[9] Kumar, Vipul, How to Detect Objects in Real-Time Using OpenCV and Python, Towards Data Science, November 2020

[10] Theodorou, Charalambos, Vladan Velisavljevic, Vladimir Dyo, and Fredi Nonyelu, Visual SLAM algorithms and their application for AR, mapping, localization, and wayfinding, Array, Volume 15, 2022, 100222, ISSN 2590-0056

[11] Yu, You, et al, Biofuel-powered soft electronic skin with multiplexed and wireless sensing for human-machine interfaces, Science Robotics 5.41, 2020: eaaz7946

[12] Jacobs, Paul, Michael J. Fu, and M. Cenk Çavuşoğlu, High fidelity haptic rendering of frictional contact with deformable objects in virtual environments using multi-rate simulation, The International Journal of Robotics Research 29.14, 2010: 1778-1792

[13] Huh, Jaesung, et al, Epic-Sounds: A Large-scale Dataset of Actions That Sound, arXiv preprint arXiv:2302.00646, 2023

[14] Autonomous Gas Detection with Robot and Falco PID Detector, ION Science, September 2021

[15] Pavlovsky, Ryan, et al, 3-D radiation mapping in real-time with the localization and mapping platform LAMP from unmanned aerial systems and man-portable configurations, arXiv preprint arXiv:1901.05038, 2018

[16] De Silva, Thushani, et al, Ultrasensitive rapid cytokine sensors based on asymmetric geometry two-dimensional MoS2 diodes, Nature Communications 13.1, 2022: 7593

[17] Half-Human, Half-Computer? Meet the Modern Centaur, PARC Blog

[18] Darling, Kate, Robots are animals, not humans, Wired, April 2021

[19] Hu, Delin, et al, Smart Capacitive E-Skin Takes Soft Robots Beyond Proprioception, 2022

[20] Global Warehouse Automation Robots, Technologies, and Solutions Market Report 2021-2030, Business Wire, June 2021

[21] Takayama, L., Toward making robots invisible-in-use: An exploration into invisible-in-use tools and agents. In Dautenhahn, K. (Ed.), New Frontiers in Human-Robot Interaction, Amsterdam, NL: John Benjamins, 111-132, 2011